A few months back

In the smoke-filled (virtual) room of the council of the high (from the smoke) elves, the wizened textualist Geoff said “All of my stuff is in boxes and containers.” Empty shelves behind him indicated he was moving house.

“When you have a complex module,” Geoff continued, “and it’s difficult to describe how to install it, do all the steps in a container, and show the Dockerfile.”

“Aha!” said the newest member, who drew his vorpal sword, and went forth to slay the Jabberwock, aka putting RakuAST::RakuDoc::Render into a container.

“Beware the Jabberwock, my son!

The jaws that bite, the claws that catch!”

After days of wandering the murky jungle of Docker/Alpine/Github/Raku documentation, the baffled elf wondered if he was in another fantasy:

“So many rabbit holes to fall down.”

Rabbit Hole, the First – alpinists beware

Best practice for a container is to choose an appropriate base image. Well obviously, there’s the latest Raku version by the friendly, hard-working gnomes at Rakuland. So, here’s the first attempt at a Dockerfile:

FROM docker.io/rakuland/raku

# Copy in Raku source code and build

RUN mkdir -p /opt/rakuast-rakudoc-render

COPY . /opt/rakuast-rakudoc-render

WORKDIR /opt/rakuast-rakudoc-render

RUN zef install . -/precompile-install

It failed. After peering at the failure message, it seemed that at least one of the dependent modules used by the rakuast-rakudoc-render distribution needs a version of make.

That’s easily fixed, just add in build-essentials, the vorpal-sworded elf thought. Something like:

FROM docker.io/rakuland/raku

# Install make, gcc, etc.

RUN apt-get update -y && \
    apt-get install -y build-essential && \
    apt-get purge -y

# Copy in Raku source code and build

RUN mkdir -p /opt/rakuast-rakudoc-render

COPY . /opt/rakuast-rakudoc-render

WORKDIR /opt/rakuast-rakudoc-render

RUN zef install . -/precompile-install

Failure! No apt.

“How can there not be APT??” the Ubuntu-using elf thought in shock. Turns out that the rakuland/raku image is built on an Alpine base, and Alpine have their own package manager apk.

Unfortunately, build-essential is a Debian package, but at the bottom of this rabbit hole lurks an equivalent apk package, build-base, leading to:

FROM docker.io/rakuland/raku

# Install make, gcc, etc.

RUN apk add build-base

# Copy in Raku source code and build

RUN mkdir -p /opt/rakuast-rakudoc-render

COPY . /opt/rakuast-rakudoc-render

WORKDIR /opt/rakuast-rakudoc-render

RUN zef install . -/precompile-install

Lo! Upon using the Podman desktop to build an image from the Dockerfile, the process came to a successful end.

But now to make things easier, there needs to be a link to the utility RenderDocs, which takes all the RakuDoc sources from docs/ and renders them to $*CWD (unless overridden by --src or --to, respectively). It will also render to Markdown unless an alternative format is given.

FROM docker.io/rakuland/raku

# Install make, gcc, etc.

RUN apk add build-base

# Copy in Raku source code and build

RUN mkdir -p /opt/rakuast-rakudoc-render

COPY . /opt/rakuast-rakudoc-render

WORKDIR /opt/rakuast-rakudoc-render

RUN zef install . -/precompile-install

# symlink executable to location on PATH

RUN ln -s /opt/rakuast-rakudoc-render/bin/RenderDocs /usr/local/bin/RenderDocs

# Directory where users will mount their documents

RUN mkdir /doc

# Directory where rendered files go

RUN mkdir /to

WORKDIR /

AND!!! When a container was created using this Dockerfile and run with its own terminal, the utility RenderDocs was visible. Running

RenderDocs -h

produced the expected output (listing all the possible arguments).

Since the entire distribution is included in the container, running

RenderDocs --src=/opt/rakuast-rakudoc-render/docs README

will render README.rakudoc in --src to /to/README.md because the default output format is Markdown.

“Fab!”, screamed the boomer-generation newbie elf. “It worked”.

“Now let’s try HTML”, he thought.

RenderDocs --format=HTML --src=/opt/rakuast-rakudoc-render/docs README

Failure: no sass.

“expletive deleted”, he sighed. “The Jabberwock is not dead!”

There are two renderers for creating HTML. One produces a single file with minimal CSS so that a normal browser can load it as a file locally and it can be rendered without any internet connection. This renderer is triggered using the option --single, which the containerised RenderDocs handles without problem.

Rabbit Hole, the second – architecture problems

But the normal use case is for HTML to be online, using a CSS framework and JS libraries from CDN sources. Since the renderer is more generic, it needs to handle custom CSS in the form of SCSS. This functionality is provided by calling an external program sass, which is missing in the container.

An internet search yields the following snippet for a container.

# install a SASS compiler

ARG DART_SASS_VERSION=1.82.0

ARG DART_SASS_TAR=dart-sass-${DART_SASS_VERSION}-linux-x64.tar.gz

ARG DART_SASS_URL=https://github.com/sass/dart-sass/releases/download/${DART_SASS_VERSION}/${DART_SASS_TAR}

ADD ${DART_SASS_URL} /opt/

RUN cd /opt/ && tar -xzf ${DART_SASS_TAR} && rm ${DART_SASS_TAR}

RUN ln -s /opt/dart-sass/sass /usr/local/bin/sass

The container image builds nicely, but the RenderDocs command STILL chokes with an unavailable sass.

Except that diving into the container’s murky depths with an ls /opt/dart-sass/ shows that sass exists!

The newbie was stumped.

So rested he by the Tumtum tree

And stood awhile in thought.

Turns out that the Alpine distribution uses a different C library (musl rather than glibc), so the standard linux-x64 build cannot run there. The wonderful dart-sass fae provide a suitable musl build, so a simple change was enough to get sass working in the container.

- ARG DART_SASS_TAR=dart-sass-${DART_SASS_VERSION}-linux-x64.tar.gz

+ ARG DART_SASS_TAR=dart-sass-${DART_SASS_VERSION}-linux-x64-musl.tar.gz

Simple does not mean found at once, but the container now contains RenderDocs, which renders to both Markdown and HTML.
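The choice of tarball can also be scripted rather than remembered. What follows is only a sketch: the helper names dart_sass_tar and detect_libc are made up for illustration, and treating the presence of /etc/alpine-release as the sign of a musl system is an assumption, not something the Dockerfile above does.

```shell
#!/bin/sh
# Hypothetical helper: build the Dart Sass release tarball name for a libc.
# Usage: dart_sass_tar VERSION LIBC   (LIBC is "musl" or "glibc")
dart_sass_tar() {
    case "$2" in
        musl) printf 'dart-sass-%s-linux-x64-musl.tar.gz\n' "$1" ;;
        *)    printf 'dart-sass-%s-linux-x64.tar.gz\n' "$1" ;;
    esac
}

# Assumption: /etc/alpine-release exists only on Alpine (musl) systems.
detect_libc() {
    if [ -f /etc/alpine-release ]; then
        echo musl
    else
        echo glibc
    fi
}

# Print the tarball name that fits the system this script runs on
dart_sass_tar 1.82.0 "$(detect_libc)"
```

Run inside the Alpine-based container this should name the -musl tarball; on a glibc distribution it names the plain linux-x64 one.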

One, two! One, two! And through and through

The vorpal blade went snicker-snack!

He left it dead, and with its head

He went galumphing back.

“I can publish this image so everyone can use it,” the FOSS fanatic elf proclaimed.

So the container image can be used in a FROM line, or fetched with a pull, using the URL

docker.io/finanalyst/rakuast-rakudoc-render

Rabbit Hole, the third – Versions

“And hast thou slain the Jabberwock?

Come to my arms, my beamish boy!

O frabjous day! Callooh! Callay!”

“It would be great,” mused the triumphant elf, “if RakuDoc sources, say for a README, could be automatically included as the github README.md of a repo”.

“Maybe as an action?”

Github actions can use containers to process files in a repo. Essentially, in an action, the contents of a repo are copied to a github-workspace, then they can be processed in the workspace, and changes to the workspace have to be committed and pushed back to the repository.

With a container, the contents of the workspace need to be made available to the container. Despite some documentation that starting a container in a github action automatically maps the github-workspace to some container directory, the exact syntax is not clear.
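Outside github, the same mapping is an ordinary bind mount on the command line. The sketch below only prints such a command, so it can be inspected before being run. The helper name render_command and the path /tmp/my-repo are invented for illustration, and routing the call through --entrypoint sh is an assumption made to avoid guessing at the image's own entrypoint.

```shell
#!/bin/sh
# Hypothetical helper: print a docker command that bind-mounts a checkout
# so that its docs/ directory appears as /docs and its root as /to.
# Usage: render_command [REPO_DIR]   (paths with spaces are not handled)
render_command() {
    repo=${1:-$PWD}
    image=docker.io/finanalyst/rakuast-rakudoc-render
    # --entrypoint sh ... -c runs the command through the image's shell,
    # which sidesteps any assumption about the image's own entrypoint.
    printf "docker run --rm -v %s/docs:/docs -v %s:/to --entrypoint sh %s -c 'RenderDocs --src=/docs --to=/to'\n" \
        "$repo" "$repo" "$image"
}

# Show the command for a checkout in /tmp/my-repo (an example path)
render_command /tmp/my-repo
```

Podman accepts the same options, so the printed line should work with podman substituted for docker.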

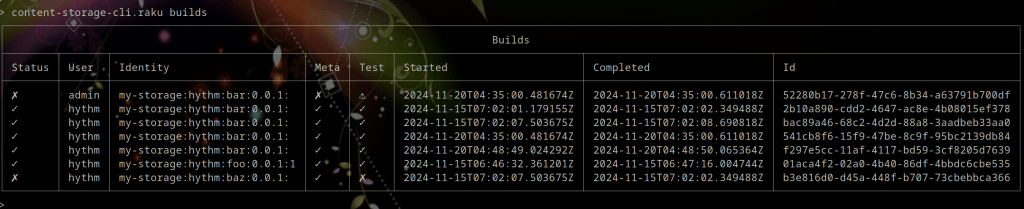

In order to discover how to deal with the multitude of possibilities, a new version of RenderDocs got written, and a new image generated, and again, and again … Unsurprisingly, between one meal and another, the ever hungry elf forgot which version was being tested.

“I’ll just include a --version argument,” thought the elf. “I can ask the Super Orcs!”.

And there behold was an SO answer to a similar question, and it was written by no lesser a high elf than the zefish package manager ugexe, not to be confused with the other Saint Nick, the package deliverer.

Mindlessly copying the spell fragment into his CLI script as:

# -v or --version selects this candidate; a required named parameter cannot also carry a default
multi sub MAIN( :version(:$v)! ) {
    say "Using version {$?DISTRIBUTION.meta<version>} of rakuast-rakudoc-render distribution."
        if $v;
}

the elf thought all done! “Callooh! Callay!”.

Except RenderDocs -v generated Any.

“SSSSSSSSh-,” the elf saw the ominous shadow of Father Christmas looming, “-ine a light on me”.

On the IRC channel, the strong-willed Coke pointed out that a script does not have compile-time variables such as $?DISTRIBUTION. Only a module does.

The all-knowing elf wizard @lizmat pointed out that command line scripts should be as short as possible, with the code in a module that exports a &MAIN.

Imbibing this wisdom, our protagonist copied the entire script contents of bin/RenderDocs to lib/RenderDocs.rakumod, added a proto sub MAIN(|) is export { {*} }, then made a short command line script with just use RenderDocs.

Inside the container terminal:

# RenderDocs -v

Using version 0.20.0 of rakuast-rakudoc-render distribution.

With that last magical idiom, our intrepid elf was ported from one rabbit hole back to the one he had just fallen down.

Rabbit Hole, the fourth – Actions

“Beware the Jubjub bird, and shun

The frumious Bandersnatch!”

“I seem to be going backwards,” our re-ported elf sighed.

Once again, the github documentation was read. After much study and heartbreak, our hero discovered a sequence that worked:

- Place Lavender and sandalwood essential oils in a fragrance disperser

- Prepare a cup of pour-over single-origin coffee with a spoon of honey, and cream

- In a github repo create a directory docs containing a file README.rakudoc

- In the same github repo create the structure

.github/
    workflows/
        CreateDocs.yml

- Write the following content to CreateDocs.yml

name: RakuDoc to MD
on:
  # Runs on pushes targeting the main branch
  push:
    branches: ["main"]
  # Allows you to run this workflow manually from the Actions tab
  workflow_dispatch:
jobs:
  container-job:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@master
        with:
          persist-credentials: false
          fetch-depth: 0
      - name: Render docs/sources
        uses: addnab/docker-run-action@v3
        with:
          image: finanalyst/rakuast-rakudoc-render:latest
          registry: docker.io
          options: -v ${{github.workspace}}/docs:/docs -v ${{github.workspace}}:/to
          run: RenderDocs

After examining the github actions logs, it seemed the rendered files were created, but the repository was not changed.

“Perhaps I should have used milk and not cream …” thought our fantasy elf.

There is in fact a missing step, committing and pushing from the github-workspace back to the repository. This can be done by adding the following to CreateDocs.yml:

      - name: Commit and Push changes
        uses: Andro999b/push@v1.3
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          branch: 'main'

Even this did not work! Github refused absolutely to write changes to the repository.

The weary elf substituted Lemon grass for Lavender in step 1, and just to be certain changed the repo settings following the instructions from the Github grimoire

- select Settings in the repo’s main page

- select Actions then General

- from the dropdown for GITHUB_TOKEN, select the one for read and write access.

- Save settings

The content – at this stage of the tale – of CreateDocs.yml is

name: RakuDoc to MD
on:
  # Runs on pushes targeting the main branch
  push:
    branches: ["main"]
  # Allows you to run this workflow manually from the Actions tab
  workflow_dispatch:
jobs:
  container-job:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@master
        with:
          persist-credentials: false
          fetch-depth: 0
      - name: Render docs/sources
        uses: addnab/docker-run-action@v3
        with:
          image: finanalyst/rakuast-rakudoc-render:latest
          registry: docker.io
          options: -v ${{github.workspace}}/docs:/docs -v ${{github.workspace}}:/to
          run: RenderDocs
      - name: Commit and Push changes
        uses: Andro999b/push@v1.3
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          branch: 'main'

It worked. “The Christmas present is now available for anyone who wants it”, thought our elf.

’Twas brillig, and the slithy toves

Did gyre and gimble in the wabe:

All mimsy were the borogoves,

And the mome raths outgrabe.

(Jabberwocky, By Lewis Carroll)

Remember to git pull for the rendered sources to appear locally as well.

Rabbit Hole, the fifth – Diagrams

“Wouldn’t it be nice to wrap the present in a ribbon? Why not put diagrams in the Markdown file?”

Our elf was on a streak, and fell down another rabbit hole: github does not allow svg in a Markdown file it renders from the repo. “It is impossible,” sighed the tired elf.

Alice laughed. “There’s no use trying,” she said: “one can’t believe impossible things.”

“I daresay you haven’t had much practice,” said the Queen. “When I was your age, I always did it for half-an-hour a day. Why, sometimes I’ve believed as many as six impossible things before breakfast.”

(Through the Looking Glass, Lewis Carroll)

Diagrams can be created using the dot program of Graphviz, which is a package that Alpine provides. So, we can create a custom block for RakuAST::RakuDoc::Render that takes a description of a graph, sends it to dot, gets an svg file back and inserts it into the output.

Except: github will not allow svg directly in a markdown file for security reasons.

But: it will allow an svg in a file that is an asset on the repo. So, now all that is needed is to save the svg in a file, reference the file in the text, and copy the asset to the same directory as the Markdown text.
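The save-and-reference step can be tried by hand with dot itself. This is a rough sketch and not the code inside RakuAST::RakuDoc::Render: the helper name graph_asset is invented, and the exact form of the reference the renderer writes into the Markdown is assumed here to be a plain image link.

```shell
#!/bin/sh
# Hypothetical helper: render DOT_FILE to OUT_DIR/NAME.svg and print a
# Markdown image reference to that asset.
# Usage: graph_asset DOT_FILE OUT_DIR NAME
graph_asset() {
    if ! command -v dot >/dev/null 2>&1; then
        echo "dot (Graphviz) is not installed" >&2
        return 1
    fi
    dot -Tsvg "$1" -o "$2/$3.svg" || return 1
    # The reference is relative: the SVG sits beside the Markdown file
    printf '![%s](%s.svg)\n' "$3" "$3"
}

# Example run in a scratch directory
work=$(mktemp -d)
printf 'digraph G { main -> parse -> execute; }\n' > "$work/simple.dot"
graph_asset "$work/simple.dot" "$work" simple || echo "no SVG produced"
rm -rf "$work"
```

Keeping the SVG beside the Markdown file means the relative link survives the copy from the container back to the repo.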

Except: the time stamps on the RakuDoc source files and the output files seem to be the same because of the multiple copying from the repo to the actions workspace to the docker container. So: add a --force parameter to RenderDocs.
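Why identical time stamps matter is easiest to see with a small check of the kind a renderer might make before doing any work. This sketch assumes the decision rests on comparing modification times, as the paragraph above suggests; needs_render is a made-up name, not part of RenderDocs.

```shell
#!/bin/sh
# Hypothetical check: should SRC be rendered to OUT?
# Usage: needs_render SRC OUT [force]
needs_render() {
    [ "${3:-}" = "force" ] && return 0   # forced: always render
    [ ! -e "$2" ] && return 0            # no output yet: render
    # otherwise render only when the source is strictly newer than the output
    [ -n "$(find "$1" -newer "$2" 2>/dev/null)" ]
}

# Simulate the workflow: source and output end up with the same time stamp
work=$(mktemp -d)
touch -t 202401010000 "$work/README.rakudoc" "$work/README.md"

if needs_render "$work/README.rakudoc" "$work/README.md"; then
    echo "render"
else
    echo "skip"      # taken here: the source is not newer than the output
fi

if needs_render "$work/README.rakudoc" "$work/README.md" force; then
    echo "render"    # taken here: force overrides the comparison
fi
rm -rf "$work"
```

With equal time stamps the plain comparison skips the file, which matches what the elf saw; the force argument is the escape hatch.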

So in Raku impossible things are just difficult.

The final content of CreateDocs.yml is now

name: RakuDoc to MD
on:
  # Runs on pushes targeting the main branch
  push:
    branches: ["main"]
  # Allows you to run this workflow manually from the Actions tab
  workflow_dispatch:
jobs:
  container-job:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@master
        with:
          persist-credentials: false
          fetch-depth: 0
      - name: Render docs/sources
        uses: addnab/docker-run-action@v3
        with:
          image: finanalyst/rakuast-rakudoc-render:latest
          registry: docker.io
          options: -v ${{github.workspace}}/docs:/docs -v ${{github.workspace}}:/to
          run: RenderDocs --src=/docs --to=/to --force
      - name: Commit and Push changes
        uses: Andro999b/push@v1.3
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          branch: 'main'

Try adding a graph to a docs/README.rakudoc in a repo, for instance:

=begin Graphviz :headlevel(2) :caption<Simple example>

digraph G {

main -> parse -> execute;

main -> init;

main -> cleanup;

execute -> make_string;

execute -> printf

init -> make_string;

main -> printf;

execute -> compare;

}

=end Graphviz

Now you will have a README with an automatic Table of Contents, all the possibilities of RakuDoc v2, and an index at the end (if you indexed any items using X<> markup).

(Sigh: All presents leave wrapping paper! A small file called semicolon_delimited_script is also pushed by github’s Commit and Push to the repo.)